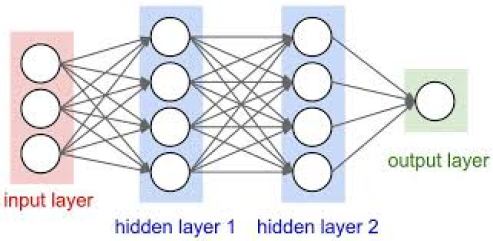

This is a “neural network”. It has an “input layer”, one or more “hidden layers”, and an “output layer”. Neural networks are loosely inspired by the way biological neurons learn a task or process.

There are many types of neural networks, each suited to different kinds of tasks.

Each connection between neurons has a “weight” attached to it. As the input passes through the network, each neuron multiplies its incoming values by their weights, adds them up, and passes the result through an “activation function”. The output layer then produces a “prediction”.
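Below is a minimal sketch of that forward pass in plain Python, assuming one hidden layer with a sigmoid activation and a single output neuron with no activation (so it can output any number, such as a price). The function names, layer sizes, and weight values are illustrative only; real networks also usually add a bias term to each neuron, which is left out here for simplicity.

```python
import math

def sigmoid(z):
    """A common activation function: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, hidden_weights, output_weights):
    """Pass input x through one hidden layer and a single output neuron.

    hidden_weights[j][i] is the weight on the connection from input i to hidden
    neuron j; output_weights[j] connects hidden neuron j to the output.
    """
    hidden = []
    for weights_j in hidden_weights:
        # Weighted sum of the inputs, then the activation function.
        z = sum(w * xi for w, xi in zip(weights_j, x))
        hidden.append(sigmoid(z))
    # The output neuron takes a weighted sum of the hidden activations.
    return sum(w * h for w, h in zip(output_weights, hidden))

# Hypothetical weights: 2 inputs -> 2 hidden neurons -> 1 output.
prediction = forward([1.0, 2.0], [[0.5, -0.25], [0.1, 0.2]], [1.0, -1.0])
print(prediction)  # about -0.1225
```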

During “training”, the prediction is compared against the true output, and a score called the “loss” is calculated with a “loss function”.
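The text does not say which loss function it has in mind. For a numeric target like a price, one common choice is mean squared error, sketched here as an assumption: the squared difference between each prediction and its true value, averaged over the examples.

```python
def mean_squared_error(predictions, targets):
    """Average of the squared differences between predictions and true outputs."""
    if len(predictions) != len(targets):
        raise ValueError("predictions and targets must have the same length")
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)

# Errors of 1 and -2 give squared errors 1 and 4, whose average is 2.5.
print(mean_squared_error([2.0, 3.0], [1.0, 5.0]))  # 2.5
```

Squaring makes every error count as positive and punishes large misses more than small ones, so a lower score means the predictions are closer overall.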

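Using the loss and some calculus (the derivative, or “gradient”, of the loss with respect to each weight), we determine which direction to change each weight so that the loss goes down. This process is called “gradient descent”. If you picture the loss as a curve plotted against the weights, the goal of training is to minimize the loss by reaching the lowest point on that curve.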
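To make the “direction” idea concrete, here is a sketch with a deliberately tiny model, assumed for illustration: a single weight `w` whose prediction is `w * x`, fitted to one hypothetical example (`x = 2`, `y = 6`). The loss curve then has its lowest point at `w = y / x = 3`. The sign of the derivative tells us which way is downhill, and repeated small steps (the hypothetical `step_size` plays the role of a learning rate) walk `w` toward that minimum.

```python
def loss(w, x, y):
    """Squared error of a one-weight model whose prediction is w * x."""
    return (w * x - y) ** 2

def gradient(w, x, y):
    """Derivative of the loss with respect to w (chain rule): 2 * (w*x - y) * x."""
    return 2 * (w * x - y) * x

def gradient_descent(x, y, w=0.0, step_size=0.05, steps=50):
    for _ in range(steps):
        # Positive slope: increasing w raises the loss, so move w down.
        # Negative slope: increasing w lowers the loss, so move w up.
        w -= step_size * gradient(w, x, y)
    return w

w = gradient_descent(x=2.0, y=6.0)
print(round(w, 6))  # 3.0
```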

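The gradient for every weight in the network is computed by working backward from the output layer to the input layer with the chain rule; this step is known as “back propagation”. Each weight is then moved a small step in the direction that lowers the loss, with the size of the step set by a “learning rate”. We repeat this whole cycle many times to train the network.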
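Putting the pieces together, the sketch below trains a tiny network with back propagation and gradient descent. It assumes one input, a few sigmoid hidden neurons, one linear output neuron, no bias terms, mean squared error as the loss, and hypothetical toy data where the target is twice the input. All names and settings (`train`, `learning_rate`, `epochs`) are illustrative; in practice a library such as PyTorch or TensorFlow computes these gradients automatically.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train(data, n_hidden=3, learning_rate=0.1, epochs=500, seed=0):
    """Train a 1-input, n_hidden, 1-output network; return weights and loss history."""
    rng = random.Random(seed)
    w_hidden = [rng.uniform(-1, 1) for _ in range(n_hidden)]  # input -> hidden
    w_out = [rng.uniform(-1, 1) for _ in range(n_hidden)]     # hidden -> output
    history = []
    n = len(data)
    for _ in range(epochs):
        grad_hidden = [0.0] * n_hidden
        grad_out = [0.0] * n_hidden
        total_loss = 0.0
        for x, y in data:
            # Forward pass: prediction for this example.
            h = [sigmoid(w * x) for w in w_hidden]
            pred = sum(v * hj for v, hj in zip(w_out, h))
            total_loss += (pred - y) ** 2
            # Backward pass: chain rule from the output back toward the input.
            d_pred = 2 * (pred - y)  # dLoss/dpred
            for j in range(n_hidden):
                grad_out[j] += d_pred * h[j]  # dLoss/dw_out[j]
                # sigmoid'(z) equals h * (1 - h), so dLoss/dz[j] is:
                d_z = d_pred * w_out[j] * h[j] * (1 - h[j])
                grad_hidden[j] += d_z * x  # dLoss/dw_hidden[j]
        history.append(total_loss / n)  # mean squared error before this update
        # Gradient descent: step each weight against its averaged gradient.
        for j in range(n_hidden):
            w_out[j] -= learning_rate * grad_out[j] / n
            w_hidden[j] -= learning_rate * grad_hidden[j] / n
    return w_hidden, w_out, history

# Hypothetical toy data: inputs from -1 to 1, target is 2 * x.
data = [(i / 4, i / 2) for i in range(-4, 5)]
w_hidden, w_out, history = train(data)
print(f"loss before: {history[0]:.4f}, after: {history[-1]:.4f}")
```

Each epoch runs the forward pass, measures the loss, propagates the error backward, and updates every weight; the recorded loss should shrink as the cycle repeats.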

Once training is complete, new inputs are fed into the network to generate predictions on examples it has not seen before. For example: input = pictures of a house, output = predicted price.

Resources